Major Update: Private AI Recs

Life is full of nuance. Which is why I made a terrible life choice when I became a content creator on the internet who discusses complex, often-political topics.

I've added an “AI” section to The New Oil that suggests more privacy-focused large language models (LLMs) that users could consider if they need an AI. Obviously I think this is a good addition to the site or else I wouldn't have done it. But for those who may be on the fence – or upset – let me explain why.

AI Is Coming Whether We Like It or Not

Last week, Proton introduced their new AI chatbot, Lumo. In the announcement, Proton stated that “whether we like it or not, AI is here to stay.” I believe this is true. Meanwhile, over on Reddit, someone replied to the announcement by saying that “AI is only here to stay because companies keep shoving it in our faces saying YOU WANT THIS.” I believe this is also true.

Holding two conflicting truths like this seems to be one of the weakest skills of most internet users, but unfortunately it's vital to navigating a complex world of gray areas and nuance. In this case, both of these things are true. AI is – I believe – absolutely 100% a bubble. Oh sure, it has uses. AI has helped me workshop ideas for content, raised talking points I had overlooked, explained difficult concepts I wanted to understand, and troubleshot so many server errors for me.

So, so many server errors. That were completely my fault.

Anyways, the point is that AI does have use cases. To say that all companies need to do to win is force something down our throats isn't entirely true. Google is a wasteland of failed attempts to force new ideas on us such as Glass. We can all enjoy a collective snicker at the Metaverse. And most of you probably already forgot about when Quibi had a commercial every ten seconds. Marketing execs wish it was as easy to sell a product as simply pumping a ton of money into ads and telling you to shut up and accept it.

AI is spreading because it does have actual use cases. Zoom's AI note-taking feature is unbelievably good. The ability to ask it to brainstorm side dishes for meals is a godsend. And you guys may not have realized it, but we use AI (technically) to generate subtitles for the podcast, as well as to smooth out many of the jump cuts. (And my god the subtitles are impressive.)

AI is being foisted upon us – against our will. This is true, and I don't want to invalidate or diminish that (more on that at the end). But that's not the only explanation for why it's catching on. Much like the internet and apps, it's spreading because people see value in it. Too much imagined value, in many cases, but there's almost always a kernel of truth under any exaggeration.

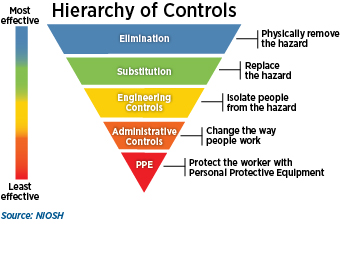

We Need Privacy-Competitive Options

In that spirit, Proton isn't wrong. If the privacy community washes its hands of AI and says “screw that thing” then we risk leaving users out in the cold. It is widely acknowledged in the privacy community (especially more pedantic circles) that email is fundamentally broken. It'd be nice if we could replace it with something more secure. Still, email persists, and that's why I strongly believe everyone should be using an encrypted email provider in order to mitigate the damage. This is a strategy called “harm reduction,” and it's proven incredibly effective in substance abuse efforts. It's not always easy – or feasible – to simply say “do without this thing.” In those cases, it's still helpful to say “here's how to make the thing less dangerous.” In OSHA, they call it “Hazard Prevention.” (Honestly, this would make a great blog post potentially, but for now let's just say “the privacy community would do well to adopt this model” and move on.)

There are, as I noted, many valid use-cases for AI. Just as our calls for people to #DeleteFacebook and switch to Linux have gone largely unheeded, so too will our calls for people to reject AI. Truth is often painful. Our messaging – as a community – needs work (probably because we insist on total perfection to the point of alienating intrigued newbies, but that's a blog for another time). It's taken years of ignored warnings and tone-deaf pleas for privacy to become even remotely mainstream – not because of us but despite us.

I want to make it clear that I do think it's always worth standing up for privacy, especially early on. Sometimes we win, and those glorious victories can shape the world for decades to come. But sometimes we don't get those clear and decisive victories, and when we realize that we're losing, sometimes we can gain more ground by learning to shift strategies rather than double down. The tree that doesn't bend in the hurricane often breaks instead.

I hate to be defeatist, but when it comes to AI, the writing is on the wall. We're not gonna win this one. The enemy troops are already cleaning up the bodies. We can either dig our heels in petulantly, or we can offer a compromise – a compromise in the form of AIs that are less sh*tty, whether that means privacy-preserving, trained on more ethical data, or more energy efficient. If we can't find those compromises, then we're leaving the masses to the abuses of Big Tech without anything to soften the blow.

But AI Remains Fundamentally Broken

All that said, I want to really drive home the point that I am not an AI maxi. I think there are some use cases for AI. There's even some stuff it does well. But that doesn't mean I want AI pumped into everything. AI is absolutely a bubble, and maybe once it bursts we can stop beating it to death and start figuring out where it actually improves our lives and (hopefully) junk the rest of it.

In the meantime, I also want to make it clear that I'm not trying to encourage everyone to use AI. There are so many perfectly valid reasons to avoid AI. As I said, there are massive, valid concerns with the training data, the compensation (or lack thereof) to the people who created that data, the strain AI scrapers are putting on the infrastructure of smaller projects, and the carbon footprint. If you don't like AI or have no use for it in your life, I 100% support your decision not to use it.

In the context of AI, I don't think “harm reduction” has to stop at “private AI proxies.” We can continue to push our legislators to hold AI companies accountable for licenses on the data they train on. We can applaud (and give feedback on) efforts by organizations like Creative Commons to create an ethical framework for AI to exist and to compensate the sources of its training data accordingly. We can push for better energy solutions. And of course, I do think that people who wish to opt out entirely – from contributing data (knowingly or not) or from using it themselves – should retain that right.

To the Future

The core principles of harm reduction – the actual illicit drug program – include things like “accepting that drug use is part of our world,” “understanding drug use as a complex, multi-faceted phenomenon,” and “not minimizing or ignoring the real and tragic harm associated with drug use.” These can all be applied to AI as well. Two things can be true at once. AI can be here to stay, while also presenting incredible risks that need to be addressed and fixed. Tools like Lumo, Duck.ai, GPT4All, and others are part of a multi-pronged approach to do just that. They're not perfect, they're not ideal, but they give users far better options than the default. The principles of harm reduction are something that the privacy community would do well to start adopting more often across other tools, lest we continue to hold ourselves back from making progress. Some progress is better than none.

Tech changes fast, so be sure to check TheNewOil.org for the latest recommendations on tools, services, settings, and more.